Output a scalar:

Linear regression:

$$y=Wx+b=\sum_{i=1}^n w_ix_i+b$$

$$L=\sum_{i=1}^n(y_i-\hat{y}_i)^2$$
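A minimal plain-Python sketch of the two formulas above, assuming a single feature vector per sample and a list of samples for the loss. The names `predict` and `squared_error_loss` are illustrative, not from any library.

```python
def predict(w, x, b):
    """Linear model: y = sum_i w_i * x_i + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def squared_error_loss(ys, y_hats):
    """L = sum_i (y_i - y_hat_i)^2, summed over all samples."""
    return sum((y - yh) ** 2 for y, yh in zip(ys, y_hats))


w, b = [2.0, -1.0], 0.5
xs = [[1.0, 1.0], [3.0, 2.0]]
ys = [1.0, 5.0]
y_hats = [predict(w, x, b) for x in xs]  # [1.5, 4.5]
print(squared_error_loss(ys, y_hats))    # 0.5
```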

Polynomial regression:

$$y=\sum_{i=1}^n w_ix^i+b$$
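Polynomial regression is still linear in the weights: expanding a scalar $x$ into the features $[x, x^2, \dots, x^n]$ turns it into the linear model above. A small sketch of that view, with hypothetical helper names:

```python
def poly_features(x, n):
    """Expand a scalar x into [x, x^2, ..., x^n]."""
    return [x ** i for i in range(1, n + 1)]


def poly_predict(w, x, b):
    """y = sum_{i=1}^n w_i * x^i + b, i.e. a linear model on poly_features(x, n)."""
    feats = poly_features(x, len(w))
    return sum(wi * fi for wi, fi in zip(w, feats)) + b


print(poly_features(2.0, 3))                     # [2.0, 4.0, 8.0]
print(poly_predict([1.0, 0.5, -1.0], 2.0, 3.0))  # 2 + 2 - 8 + 3 = -1.0
```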

Logistic regression (output probability):

$$y=\sigma(Wx+b)=\frac{1}{1+e^{-\sum_{i=1}^n w_ix_i-b}}$$

$$L=-\sum_{i=1}^n\left[y_i\log(\hat{y}_i)+(1-y_i)\log(1-\hat{y}_i)\right]$$
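A minimal sketch of logistic regression in plain Python, assuming labels $y_i\in\{0,1\}$ and the standard binary cross-entropy, which includes the $(1-y_i)\log(1-\hat{y}_i)$ term so that negative samples also contribute to the loss. The clipping constant `eps` is an assumption added to avoid `log(0)`.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_predict(w, x, b):
    """Probability of the positive class: sigma(sum_i w_i x_i + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def binary_cross_entropy(ys, y_hats, eps=1e-12):
    """-sum_i [y_i log(y_hat_i) + (1 - y_i) log(1 - y_hat_i)], labels in {0, 1}."""
    total = 0.0
    for y, p in zip(ys, y_hats):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total


p = logistic_predict([1.0, -1.0], [2.0, 2.0], 0.0)  # sigma(0) = 0.5
print(p, binary_cross_entropy([1, 0], [0.5, 0.5]))  # 0.5, about 1.386 (= 2 ln 2)
```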

If the model cannot even fit the training data, it has large bias (underfitting).

If the model fits the training data but not the testing data, it has large variance (overfitting).

To prevent underfitting, we can:

- Add more features as input.
- Use a more complex and flexible model.

A more complex model does not always lead to better performance on testing data or new data:

| Model (highest power of $x$) | Training Error | Testing Error |
|---|---|---|
| $x$ | 31.9 | 35.0 |
| $x^2$ | 15.4 | 18.4 |
| $x^3$ | 15.3 | 18.1 |
| $x^4$ | 14.9 | 28.2 |
| $x^5$ | 12.8 | 232.1 |

An extreme example: the function below obtains $0$ error on the training data $X$, while its output on any unseen input is random.

$$
f(x)=\begin{cases}
y_i, & \text{if } x=x_i \text{ for some } x_i\in X \\
\text{random}, & \text{otherwise}
\end{cases}
$$

To prevent overfitting, we can:

- More training data.
- Data augmentation: crop, flip, rotate, cutout, mixup.
- Constrained model:
  - Fewer parameters, sharing parameters.
  - Fewer features.
  - Early stopping.
  - Dropout.
  - Regularization.

$$
\begin{split}
L(w)&=L_0(w)+\frac{\lambda}{2}\sum_{i=1}^n w_i^2,\quad L_0(w)=\sum_{i=1}^n(y_i-\hat{y}_i)^2\\
w_{t+1}&=w_t-\eta\nabla L(w_t)\\
&=w_t-\eta\left(\frac{\partial L_0}{\partial w}+\lambda w_t\right)\\
&=(1-\eta\lambda)w_t-\eta\frac{\partial L_0}{\partial w}
\quad(\text{Regularization: Weight Decay})
\end{split}
$$

The factor $(1-\eta\lambda)$ shrinks the weights toward zero at every step, hence the name weight decay.
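A small numerical check of the weight-decay derivation, assuming the penalty is written as $\frac{\lambda}{2}\sum_i w_i^2$ so that its gradient is $\lambda w$. The data-loss gradient `g` is a made-up value standing in for $\partial L_0/\partial w$; the point is only that the two update forms agree.

```python
def sgd_step_with_penalty(w, grad_data, eta, lam):
    """w - eta * (grad_data + lam * w): plain gradient step on L0 + (lam/2) * ||w||^2."""
    return [wi - eta * (gi + lam * wi) for wi, gi in zip(w, grad_data)]


def weight_decay_step(w, grad_data, eta, lam):
    """(1 - eta*lam) * w - eta * grad_data: shrink the weights, then take the usual step."""
    return [(1 - eta * lam) * wi - eta * gi for wi, gi in zip(w, grad_data)]


w = [1.0, -2.0]
g = [0.5, 0.5]  # hypothetical gradient of the data loss L0 at w
a = sgd_step_with_penalty(w, g, eta=0.1, lam=0.5)
b = weight_decay_step(w, g, eta=0.1, lam=0.5)
print(a, b)  # equal up to floating-point rounding
```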

Binary classification:

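$$y=\delta(Wx+b)$$

$$L=\sum_{i=1}^n\delta(y_i\ne\hat{y}_i)$$

In the model, $\delta$ thresholds the score $Wx+b$ into a class label; in the loss, $\delta(\cdot)$ is the indicator function (1 if the condition holds, 0 otherwise), so $L$ counts the misclassified samples.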
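A minimal sketch of the thresholded classifier and its 0-1 loss. Using labels $\{0, 1\}$ and sending a score of exactly 0 to class 1 are assumptions; other conventions (e.g. $\pm 1$ labels) work the same way. Because this loss is a count, it has no useful gradient, which is one reason smooth losses such as cross-entropy are used for training.

```python
def step(z):
    """Hard threshold: 1 if z >= 0, else 0 (assumed form of delta in y = delta(Wx + b))."""
    return 1 if z >= 0 else 0


def classify(w, x, b):
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)


def zero_one_loss(ys, y_hats):
    """Number of misclassified samples: sum_i [y_i != y_hat_i]."""
    return sum(1 for y, yh in zip(ys, y_hats) if y != yh)


w, b = [1.0, 1.0], -1.0
xs = [[0.0, 0.0], [1.0, 1.0], [2.0, -3.0]]
ys = [0, 1, 1]
y_hats = [classify(w, x, b) for x in xs]  # [0, 1, 0]
print(zero_one_loss(ys, y_hats))          # 1
```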

Multi-class classification:

$$y=\text{softmax}(Wx+b)$$

$$L=-\sum_{i=1}^n y_i\log(\hat{y}_i)$$
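A plain-Python sketch of softmax and cross-entropy, reading the sum in $L$ as running over the classes of one sample whose label $y$ is one-hot (an assumption about the notation). Subtracting the maximum score before exponentiating does not change the result but avoids overflow.

```python
import math


def softmax(z):
    m = max(z)  # shift by the max for numerical stability; output is unchanged
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(y_onehot, y_hat, eps=1e-12):
    """-sum_k y_k log(y_hat_k) for one sample with a one-hot label."""
    return -sum(y * math.log(max(p, eps)) for y, p in zip(y_onehot, y_hat))


probs = softmax([1.0, 2.0, 3.0])
print(probs, sum(probs))            # probabilities summing to 1
print(cross_entropy([0, 0, 1], probs))
```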

Non-linear model:

- Deep learning: $y=\text{softmax}(\text{ReLU}(Wx+b))$
- Support vector machine (SVM): $y=\text{sign}(Wx+b)$
- Decision tree: $y=\text{vote}(\text{leaves}(x))$
- K-nearest neighbors (KNN): $y=\text{vote}(\text{neighbors}(x))$
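As one concrete case from the list, a minimal KNN sketch in plain Python, reading $\text{vote}(\text{neighbors}(x))$ as a majority vote over the labels of the $k$ training points closest to $x$. Squared Euclidean distance, $k=3$, and the name `knn_predict` are assumptions for illustration.

```python
from collections import Counter


def knn_predict(train_x, train_y, x, k=3):
    """Label x by majority vote among its k nearest training points."""
    # Pair each training label with its squared Euclidean distance to x.
    dists = sorted(
        ((sum((a - b) ** 2 for a, b in zip(xi, x)), yi) for xi, yi in zip(train_x, train_y)),
        key=lambda t: t[0],
    )
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


train_x = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
train_y = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(train_x, train_y, [0.5, 0.5]))  # a
print(knn_predict(train_x, train_y, [5.5, 5.5]))  # b
```

With an odd $k$ and two classes the vote cannot tie; with more classes a tie-breaking rule has to be chosen.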

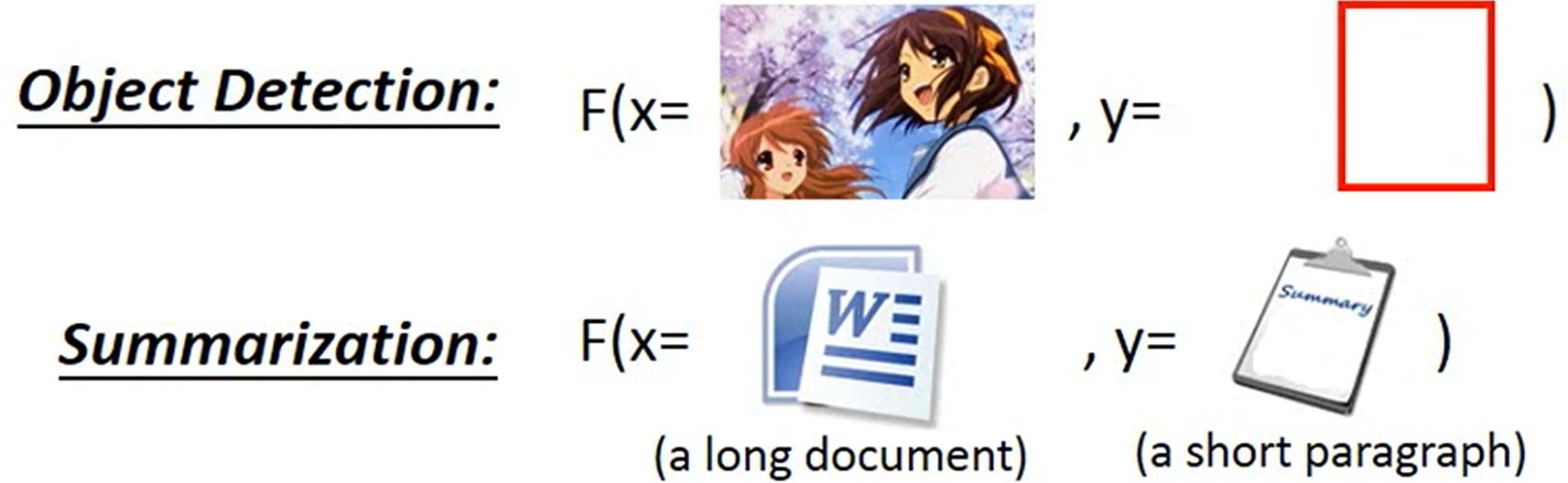

Find a function $F: X\times Y\to\mathbb{R}$, where $F(x, y)$ scores how well the output $y$ fits the input $x$.

Given an object $x$, the prediction is the $y$ with the highest score:

$$\tilde{y}=\arg\max_{y\in Y}F(x, y)$$
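When $Y$ is small enough to list, the $\arg\max$ can be solved by brute force, as in this sketch. The scoring function `F` here is a hypothetical stand-in; for structured outputs $Y$ is usually far too large to enumerate, which is why inference is treated as its own problem.

```python
def infer(F, x, candidates):
    """Return the candidate y that maximizes F(x, y) (brute-force argmax over a finite Y)."""
    return max(candidates, key=lambda y: F(x, y))


# Hypothetical score: higher when y is numerically close to x.
F = lambda x, y: -(x - y) ** 2
print(infer(F, 2.2, [0, 1, 2, 3]))  # 2
```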

- Evaluation: what does $F(x, y)$ look like?
- Inference: how to solve the $\arg\max$?
- Training: how to find $F(x, y)$?

$$
\begin{split}
F(x, y)&=\sum_{i=1}^n w_i\phi_i(x, y)\\
&=\begin{bmatrix}w_1\\w_2\\w_3\\\vdots\\w_n\end{bmatrix}\cdot
\begin{bmatrix}\phi_1(x, y)\\\phi_2(x, y)\\\phi_3(x, y)\\\vdots\\\phi_n(x, y)\end{bmatrix}\\
&=W\cdot\Phi(x, y)
\end{split}
$$
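A sketch of the linear form $F(x, y)=W\cdot\Phi(x, y)$ on a toy task. Everything task-specific is hypothetical: $x$ is a sentence, $y$ is a candidate topic word, and the three features in `phi` are invented for illustration. The feature map looks at $x$ and $y$ jointly, and training would only need to find `W`.

```python
def phi(x, y):
    """Hypothetical joint feature map Phi(x, y) for the toy task."""
    words = x.lower().split()
    return [
        float(words.count(y)),                     # phi_1: how often y appears in x
        1.0 if words and words[0] == y else 0.0,   # phi_2: does x start with y?
        float(len(y)),                             # phi_3: length of the candidate
    ]


def F(W, x, y):
    """F(x, y) = W . Phi(x, y) = sum_i w_i * phi_i(x, y)."""
    return sum(w * f for w, f in zip(W, phi(x, y)))


W = [2.0, 1.0, -0.1]
x = "cats chase mice and cats sleep"
for y in ["cats", "mice", "dogs"]:
    print(y, F(W, x, y))
print(max(["cats", "mice", "dogs"], key=lambda y: F(W, x, y)))  # cats
```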